Everyone has heard of AI. Even non-geeks may have used Midjourney, which was arguably the best image generation AI tool — until now.

Stability AI has released Stable Diffusion XL (SDXL), the latest version of its popular, permissively licensed image generation tool. Stable Diffusion consists of both code and pre-trained models, and what makes it stand out is that both are openly available and can be run on consumer hardware.

Although there is a plethora of online tools to get started quickly, this is a moderately technical account of a self-hosted install, and a deeper dive into the details behind the latest trend in technology.

What is Latent Diffusion?

I’ve tinkered with Python and “AI” tools since circa 2013, starting with early NLP (Natural Language Processing) libraries. Between that and my background in CompSci, I spend a lot of time reading white papers and building a deep, fundamental understanding of how new technology works.

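I won’t bore you with the gory details: weighted models, training sets, bias, VAEs (variational autoencoders), tensors, and LLMs. In a nutshell, a latent diffusion model like Stable Diffusion starts from random noise in a compressed “latent” space and removes that noise step by step, guided by your text prompt; a VAE then decodes the finished latent into a full-size image. Even so, I have only scratched the surface of what is arguably the bleeding edge of mathematics.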
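For a rough sense of why working in a compressed latent space matters, here is a back-of-the-envelope sketch. It assumes the standard Stable Diffusion VAE layout, which shrinks each side of the image by a factor of 8 and uses 4 latent channels; the function names are just for illustration.

```python
def pixel_values(width: int, height: int, channels: int = 3) -> int:
    """Number of values in an RGB image."""
    return width * height * channels


def latent_values(width: int, height: int, factor: int = 8, channels: int = 4) -> int:
    """Number of values in the VAE latent, assuming an 8x spatial downsample and 4 channels."""
    if width % factor or height % factor:
        raise ValueError("image sides should be multiples of the downsampling factor")
    return (width // factor) * (height // factor) * channels


if __name__ == "__main__":
    w, h = 1024, 1024  # SDXL's native resolution
    px, lat = pixel_values(w, h), latent_values(w, h)
    print(f"pixels: {px:,}  latent: {lat:,}  ratio: {px / lat:.0f}x")
```

For a 1024×1024 image that works out to 3,145,728 pixel values versus 65,536 latent values, about 48 times fewer. The denoising happens on that small 128×128×4 latent, and the VAE only turns it back into pixels at the very end.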

Don’t Cross the Picket Line

There’s a lot of consternation regarding AI, and concerns about its broad societal and technical repercussions, such as artists, actors, and others being replaced by automated AI, are valid. We proudly support fellow artists, and my goal in using these tools is to demonstrate how they can empower ordinary people, not disenfranchise them.

Setup

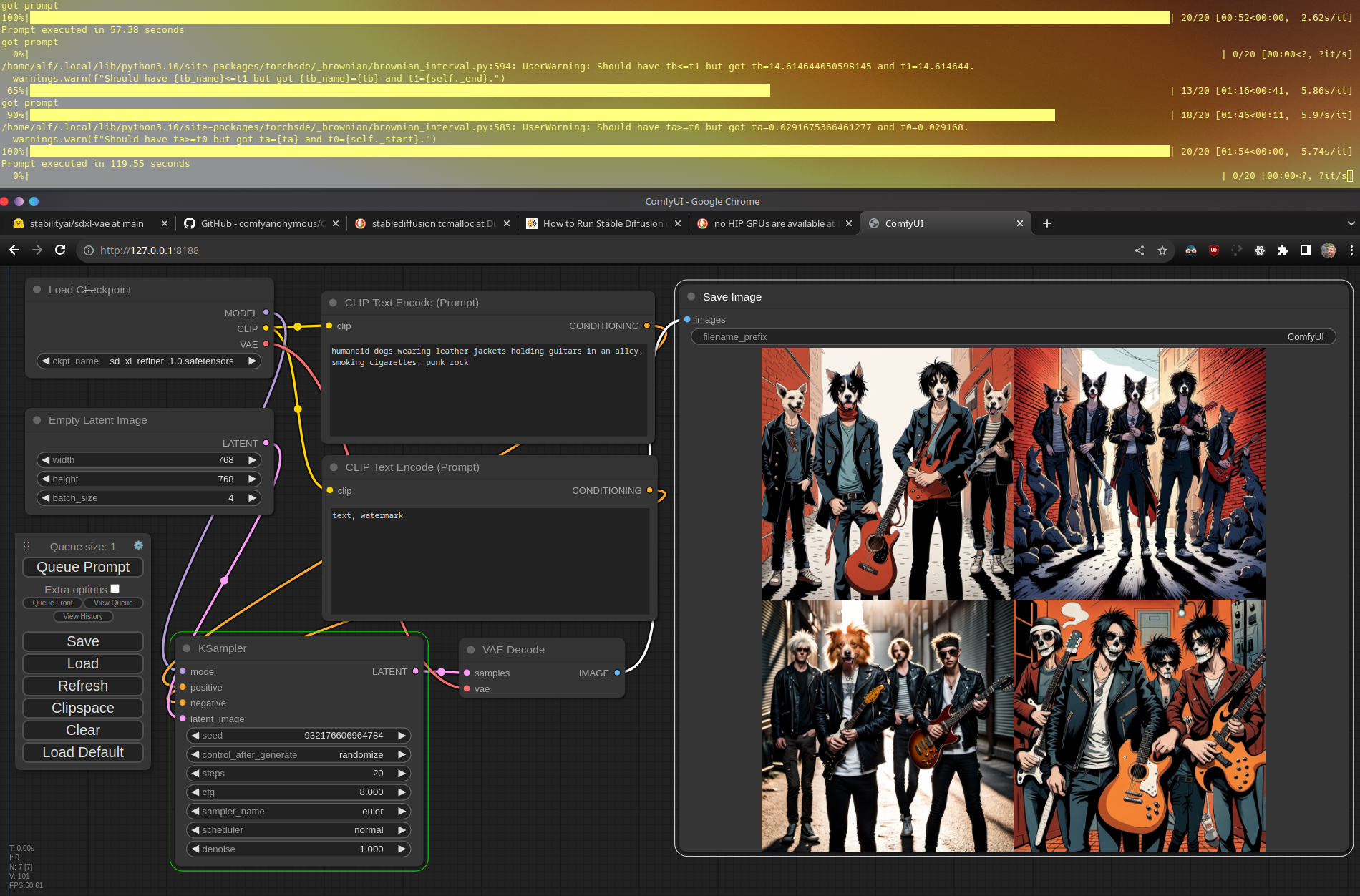

Installing AI tools on your computer is not straightforward. There are tons of requirements: graphics capability (what do you mean 8GB of VRAM isn’t enough?), comfort with Python and pip in the terminal, and a fair bit of free space (20GB+). Then there are the front-ends to choose between (ComfyUI vs. Automatic1111) and parameter building, which goes beyond prompt engineering; Stability AI claims this latest model largely eliminates the need for the latter.

Linux makes this stuff pretty easy, and my modest setup (i7-118k, 32GB DDR4, 2TB NVMe, NVIDIA RTX 3060 with 8GB VRAM) should have enough power to run it. I figured: I can run Cyberpunk 2077, so how hard can it be?
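Before downloading tens of gigabytes of models, a quick preflight check can save some grief. The sketch below assumes an NVIDIA card and the `nvidia-smi` tool that ships with NVIDIA's driver, and reports free disk space and total VRAM. The 20GB figure comes from above; the roughly 8GB VRAM threshold and all helper names are my own assumptions, not official SDXL requirements.

```python
from __future__ import annotations

import shutil
import subprocess

MIN_FREE_DISK_GB = 20  # from the post: plan for 20GB+ of free space
MIN_VRAM_MIB = 8000    # assumption: roughly an 8GB card, with slack for how drivers report it


def free_disk_gb(path: str = ".") -> float:
    """Free space on the filesystem that holds `path`, in GB (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def parse_vram_mib(nvidia_smi_output: str) -> list[int]:
    """Parse nvidia-smi's noheader/nounits CSV: one total-memory value (MiB) per GPU."""
    return [int(token) for token in nvidia_smi_output.split()]


def gpu_vram_mib() -> list[int]:
    """Ask nvidia-smi for each GPU's total memory; return [] if that doesn't work."""
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True, timeout=10,
        )
        return parse_vram_mib(result.stdout)
    except (OSError, subprocess.SubprocessError, ValueError):
        return []


def preflight(path: str = ".") -> list[str]:
    """Return human-readable warnings; an empty list means nothing obvious is missing."""
    warnings = []
    free = free_disk_gb(path)
    if free < MIN_FREE_DISK_GB:
        warnings.append(f"only {free:.1f} GB free at {path!r}; plan for {MIN_FREE_DISK_GB}+ GB")
    vram = gpu_vram_mib()
    if not vram:
        warnings.append("nvidia-smi not found or failed; is the NVIDIA driver loaded?")
    elif max(vram) < MIN_VRAM_MIB:
        warnings.append(f"largest GPU has {max(vram)} MiB of VRAM; expect to need low-VRAM options")
    return warnings


if __name__ == "__main__":
    for warning in preflight():
        print("WARNING:", warning)
```

Run it from the directory where you plan to keep the models, since free space is measured on whichever filesystem holds that path.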

I installed Stability AI’s code directly from their GitHub, as well as the base, refiner, and VAE models from Hugging Face. I already had A1111 (Automatic1111) installed, and importing the models was a breeze. However, I wanted to try out ComfyUI, especially given my comfort with JACK-style audio patching on Linux: ComfyUI builds a pipeline by wiring nodes together in a graph, much like patching audio ports.
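To make the patching analogy concrete: ComfyUI's HTTP API accepts a workflow as a JSON graph where each node has a `class_type` and `inputs`, and a wire is written as `[source_node_id, output_index]`. The sketch below builds a minimal SDXL text-to-image graph and queues it on a local ComfyUI server. It assumes ComfyUI is running at its default `http://127.0.0.1:8188`, that the base checkpoint sits in ComfyUI's `models/checkpoints` folder as `sd_xl_base_1.0.safetensors`, and the prompts, sampler settings, and helper names are illustrative placeholders, not tuned values.

```python
from __future__ import annotations

import json
import urllib.request

COMFY_URL = "http://127.0.0.1:8188/prompt"  # assumption: local ComfyUI on its default port
CHECKPOINT = "sd_xl_base_1.0.safetensors"   # assumption: file name in ComfyUI/models/checkpoints


def build_workflow(positive: str, negative: str, seed: int = 0) -> dict:
    """A minimal SDXL text-to-image graph. Wires are [node_id, output_index],
    much like patching one JACK port into another."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple",  # outputs: 0=MODEL, 1=CLIP, 2=VAE
              "inputs": {"ckpt_name": CHECKPOINT}},
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": positive, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative, "clip": ["1", 1]}},
        "4": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
        "5": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                         "latent_image": ["4", 0], "seed": seed, "steps": 25, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
        "6": {"class_type": "VAEDecode",
              "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
        "7": {"class_type": "SaveImage",
              "inputs": {"images": ["6", 0], "filename_prefix": "sdxl"}},
    }


def dangling_links(workflow: dict) -> list[tuple[str, str]]:
    """Return (node_id, input_name) pairs whose wire points at a node that doesn't exist."""
    bad = []
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            if isinstance(value, list) and value[0] not in workflow:
                bad.append((node_id, name))
    return bad


def queue_prompt(workflow: dict, url: str = COMFY_URL) -> dict:
    """POST the graph to ComfyUI's /prompt endpoint and return its JSON reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    graph = build_workflow("punk rock musician on stage, photograph", "blurry, extra fingers")
    assert not dangling_links(graph)
    print(queue_prompt(graph))
```

Checking for dangling wires before queueing is the graph equivalent of making sure every JACK connection actually has a port on the other end.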

After installing some Python requirements, switching my NVIDIA PRIME profile from on-demand to performance, and tweaking a couple of parameters (medvram, full precision, etc.), it was all ready to go. Pretty standard stuff with PyTorch and scientific packages.
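The full-precision and medvram tweaks come down to simple arithmetic. Stability AI describes the SDXL 1.0 base model as roughly 3.5 billion parameters (treat that figure as approximate); the sketch below estimates the memory needed for the weights alone at half and full precision. It ignores activations, the refiner, and framework overhead, so real usage is higher.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}  # full precision vs. half precision
GIB = 1024 ** 3


def weights_gib(num_params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in GiB (no activations, no overhead)."""
    return num_params * BYTES_PER_PARAM[precision] / GIB


if __name__ == "__main__":
    sdxl_base_params = 3.5e9  # approximate, per Stability AI's SDXL 1.0 announcement
    vram_gib = 8              # the RTX 3060 in this post
    for precision in ("fp16", "fp32"):
        need = weights_gib(sdxl_base_params, precision)
        verdict = "fits" if need < vram_gib else "does NOT fit"
        print(f"{precision}: ~{need:.1f} GiB of weights, {verdict} in {vram_gib} GiB")
```

At fp16 the weights alone take about 6.5 GiB, leaving little headroom on an 8GB card; at full precision (about 13 GiB) they cannot all sit in VRAM at once. That is the situation A1111's `--medvram` option addresses, by keeping only part of the model on the GPU at a time and parking the rest in system RAM.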

I ran a couple of prompts, with no prompt engineering, and was impressed by the results. They came out a little more fursona-esque than I intended (I was going for punk rock and didn’t specify a style), but for an out-of-the-box config, I was pleasantly surprised.

The usual array of styles, polydactyl hands, and broken lines was on display. However, there’s a lot of flexibility and tooling here we haven’t even explored yet, such as in-painting, VAE-based fixes, and different encoders.

Stay Tuned: Pushing the Limits

In short, ComfyUI was much more powerful than A1111, but there are some things that A1111 makes super easy. In future posts, we’ll go over some of the details and how to do this for yourself, as well as the technical and mathematical underpinnings.

Christopher “Owen” Owens is an avid geek, proudly supporting open-source software, Linux, and all things web. When AFK, you can find him thrashing on his ukulele or connecting with nature.